Stop Installing Runtimes on Your Laptop (AI Era Edition)

Context

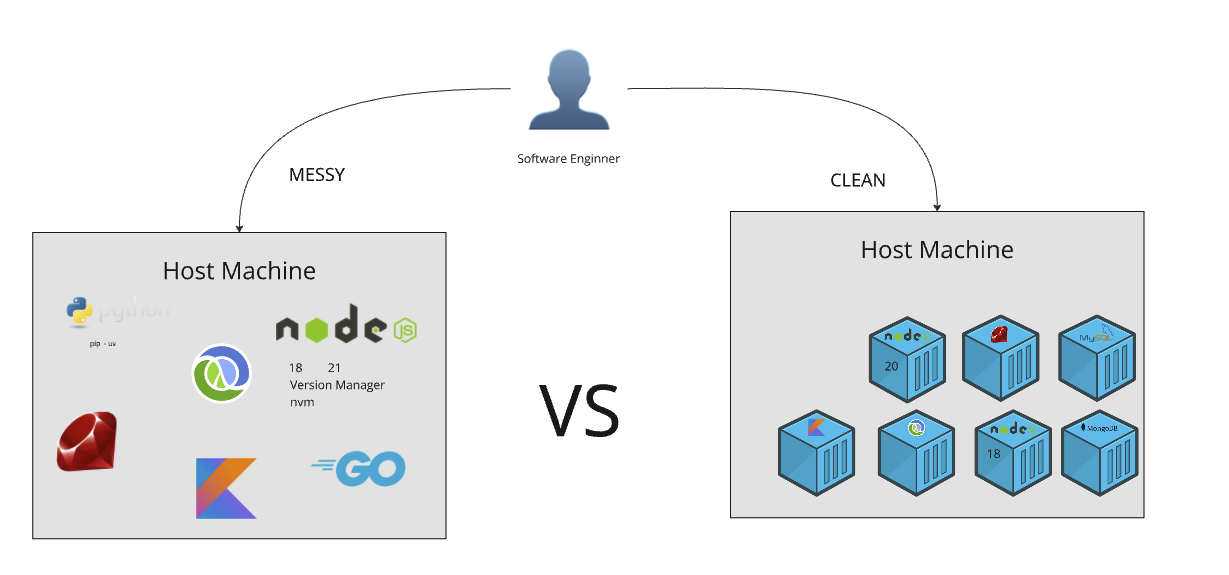

In 2025, most engineers work across several stacks in the same week: Python/CUDA for LLMs, TypeScript/Next.js for UIs, Go/Rust for services, maybe a dash of Clojure for data flows. Installing each toolchain plus its version or package manager (nvm, n, pyenv, pip/uv, rbenv, sdkman, cargo, composer…) on the host machine is an anti-pattern. It inflates onboarding time, expands your supply-chain blast radius, and all but guarantees drift between dev, CI, and prod.

The fix is boring and effective: project-scoped, declarative containerized dev environments. You keep your host clean (just Docker/Podman), and each repo defines its own world.

Why this matters more in the AI wave

- Polyglot by default. AI workflows force you across languages and OS deps (CUDA, MKL, libstdc++, OpenSSL). Pin them in containers; stop mutating your OS.

- Reproducibility is a feature. Agents, fine-tuning, and inference break when your laptop differs from CI/prod. Bake the env once; reuse it everywhere.

- Security & supply chain. Fewer global installers on the host = fewer privilege boundaries crossed and fewer places for malicious packages to land.

“But containers run as root.”

They don’t have to. Use non-root users inside images or run Docker rootless. All the examples below set a non-root USER.

My bias (context)

I spent years in production-first orgs (e.g., Nubank), where dev/prod parity and Kubernetes discipline beat “works on my machine.” Too many courses still teach host installs. It’s time to normalize the cleaner default.

Copy-paste Cookbook: Six Minimal Dockerfiles

Each Dockerfile assumes:

- You only have Docker installed on your host.

- You’ll bind-mount your source (for live reload where applicable) or bake source into an image for CI/preview.

- A non-root user runs your process.

Tip: name the files Dockerfile.php, Dockerfile.clj, etc., and pass the matching one to `docker build -f`.

1) PHP (CLI server + Composer, no host PHP)

Structure

```
/app
├─ composer.json
├─ composer.lock (optional)
└─ public/index.php
```

Dockerfile.php

```dockerfile
# --- deps build stage (composer only) ---
FROM composer:2 AS deps
WORKDIR /app
COPY composer.json composer.lock* ./
# No "|| true": a failed install should fail the build, not ship an empty vendor/.
# --no-scripts: the app source isn't copied into this stage yet.
# If composer.json requires PHP extensions missing from the composer image,
# add --ignore-platform-reqs.
RUN composer install --no-dev --prefer-dist --no-interaction --no-progress --no-scripts

# --- runtime ---
FROM php:8.3-cli-alpine
# Create non-root user
RUN adduser -D -u 10001 appuser
WORKDIR /app
# Copy deps and app
COPY --from=deps /app/vendor /app/vendor
COPY . .
USER appuser
EXPOSE 8080
# PHP built-in server serving /public
CMD ["php", "-S", "0.0.0.0:8080", "-t", "public"]
```

Run

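```bash
docker build -f Dockerfile.php -t php-app .
# The anonymous volume at /app/vendor keeps the image's installed deps visible;
# otherwise the bind mount of "$PWD" hides them (there is no host Composer)
docker run --rm -p 8080:8080 -v "$PWD":/app -v /app/vendor php-app
```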

2) Clojure (tools.deps, no host JDK)

Assumes you use deps.edn with an alias :run that starts your app (e.g., Ring/Jetty).

Structure

```
/app
├─ deps.edn (define :run and :test aliases)
└─ src/...
```

Dockerfile.clj

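```dockerfile
FROM clojure:temurin-21-tools-deps

# Create non-root user and give it the working directory
RUN useradd -m -u 10001 appuser
WORKDIR /app
RUN chown appuser:appuser /app
# Switch users before fetching deps so they land in /home/appuser/.m2,
# where the runtime user looks for them (root's ~/.m2 is not readable)
USER appuser

# Cache deps, including those pulled in by the :run alias
COPY --chown=appuser:appuser deps.edn ./
RUN clojure -P -M:run

# Copy source (src/, plus resources/ if you have one).
# COPY has no shell redirection, so "2>/dev/null || true" is not valid here.
COPY --chown=appuser:appuser . .

EXPOSE 3000
CMD ["clojure", "-M:run"]
```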

Run

```bash
docker build -f Dockerfile.clj -t clj-app .
docker run --rm -p 3000:3000 -v "$PWD":/app clj-app
```

Hot reload: use `tools.namespace` or your preferred watcher inside the container. You can also run an nREPL server in the container and jack in from your editor.

3) Next.js (Node 20, dev ready, no host Node/NPM)

Structure

```
/app
├─ package.json
├─ package-lock.json (or pnpm-lock.yaml / yarn.lock)
├─ next.config.js (optional)
└─ app/ or pages/
```

Dockerfile.next

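```dockerfile
FROM node:20-bookworm

# Create non-root user and hand it the working directory,
# so npm can write node_modules/ and `next dev` can write .next/
RUN useradd -m -u 10001 appuser
WORKDIR /app
RUN chown appuser:appuser /app
USER appuser

# Install deps (cache-friendly). `npm ci` needs package-lock.json;
# with pnpm or yarn, swap in that tool's frozen-lockfile install.
COPY --chown=appuser:appuser package*.json ./
RUN npm ci

# Copy the rest of the project
COPY --chown=appuser:appuser . .

ENV NEXT_TELEMETRY_DISABLED=1
ENV PORT=3000
EXPOSE 3000

# Dev server bound to 0.0.0.0 for container networking
CMD ["npm", "run", "dev", "--", "-p", "3000", "-H", "0.0.0.0"]
```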

Run

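```bash
docker build -f Dockerfile.next -t next-app .
# The anonymous volume at /app/node_modules keeps the image's installed deps
# visible; otherwise the bind mount of "$PWD" hides them
docker run --rm -p 3000:3000 -v "$PWD":/app -v /app/node_modules next-app
```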

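If file watching is flaky on macOS/Windows, turn on polling. The Next.js dev server watches files through Watchpack, so set `WATCHPACK_POLLING=true` (`CHOKIDAR_USEPOLLING=1` is the equivalent for chokidar-based tools), either as an `ENV` in the Dockerfile or with `docker run -e`.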

Production variant (optional)

- Build in the same image and swap `CMD` to `npm run start` after `npm run build`, or use a multi-stage build to copy `.next` into a slim runtime.
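The multi-stage option could look roughly like this. It is a minimal sketch with some assumptions: npm with a package-lock.json, and `next`, `react`, and `react-dom` listed under `dependencies` (the create-next-app default), so `npm prune --omit=dev` keeps them. It runs as the `node` user that the official Node images already include, so it doesn't create `appuser`. The filename `Dockerfile.next.prod` is hypothetical.

```dockerfile
# --- build: full toolchain, dev deps included ---
FROM node:20-bookworm AS build
WORKDIR /app
ENV NEXT_TELEMETRY_DISABLED=1
COPY package*.json ./
RUN npm ci
COPY . .
RUN npm run build

# --- runtime: slim base, production deps only ---
FROM node:20-bookworm-slim
WORKDIR /app
ENV NODE_ENV=production NEXT_TELEMETRY_DISABLED=1
# Copy the built project (including .next/), owned by the image's non-root "node" user
COPY --from=build --chown=node:node /app ./
# Drop devDependencies; assumes next/react/react-dom are in "dependencies"
RUN npm prune --omit=dev
USER node
EXPOSE 3000
CMD ["npm", "run", "start", "--", "-p", "3000", "-H", "0.0.0.0"]
```

Build it with `docker build -f Dockerfile.next.prod -t next-app:prod .` and run it without the bind mount, because the source is baked in. Copying all of `/app` and then pruning gives a larger image than Next's `output: 'standalone'` mode. The upside is that it needs no changes to next.config.js.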

4) Python (FastAPI/Uvicorn example, no host Python/pip)

Structure

```
/app
├─ requirements.txt (e.g., fastapi==0.112.0 uvicorn[standard]==0.30.0)
└─ app.py (or app/__init__.py with app = FastAPI())
```

Dockerfile.py

```dockerfile
FROM python:3.11-slim

# Create non-root user
RUN adduser --disabled-password --gecos "" --uid 10001 appuser
WORKDIR /app
ENV PYTHONDONTWRITEBYTECODE=1
ENV PYTHONUNBUFFERED=1

# Install Python deps
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

# Copy app
COPY . .
USER appuser
EXPOSE 8000

# For a file named app.py with a FastAPI() instance named "app";
# --reload restarts the server when the bind-mounted source changes (dev only)
CMD ["python", "-m", "uvicorn", "app:app", "--host", "0.0.0.0", "--port", "8000", "--reload"]
```

Run

```bash
docker build -f Dockerfile.py -t py-app .
docker run --rm -p 8000:8000 -v "$PWD":/app py-app
```

5) Rust (multi-stage, tiny runtime, no host Rust/cargo)

Structure

```
/app
├─ Cargo.toml
└─ src/main.rs
```

Dockerfile.rs

```dockerfile
# --- builder ---
FROM rust:1.80-bookworm AS builder
WORKDIR /app

# Cache deps: build once against a placeholder main.rs
COPY Cargo.toml Cargo.lock* ./
RUN mkdir -p src && echo "fn main() { println!(\"placeholder\"); }" > src/main.rs
RUN cargo build --release

# Now copy real sources and rebuild. Touch main.rs so cargo sees it as newer
# than the placeholder build and actually recompiles it.
COPY src ./src
RUN touch src/main.rs && cargo build --release

# --- runtime ---
FROM debian:bookworm-slim
# Create non-root user
RUN useradd -m -u 10001 appuser
WORKDIR /app
# Copy only the binary, not all of target/release/ (deps/, build/, .fingerprint/...).
# Replace 'myapp' with your binary name (the [package] name or a [[bin]] name in Cargo.toml).
COPY --from=builder /app/target/release/myapp /usr/local/bin/myapp
USER appuser
EXPOSE 8080
CMD ["myapp"]
```

Run

```bash
docker build -f Dockerfile.rs -t rs-app .
docker run --rm -p 8080:8080 rs-app
```

Want a near-zero runtime image? Build statically (musl) and use `gcr.io/distroless/static-debian12:nonroot` as the runtime base. Once the builder produces a static binary, the runtime stage needs little more than a different `FROM`, plus the musl target path in its `COPY`.
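Here is a sketch of that static variant. It assumes an x86_64 build host (hence the `x86_64-unknown-linux-musl` target) and a binary named `myapp` as above. It also assumes your dependencies build cleanly against musl: pure-Rust crates are fine, but crates that link system C libraries such as OpenSSL need extra setup, so prefer rustls-based options. For brevity it drops the placeholder dependency-caching trick.

```dockerfile
# --- builder: static musl binary ---
FROM rust:1.80-bookworm AS builder
# musl target for fully static linking; musl-tools provides musl-gcc for crates with C code
RUN rustup target add x86_64-unknown-linux-musl \
 && apt-get update \
 && apt-get install -y --no-install-recommends musl-tools \
 && rm -rf /var/lib/apt/lists/*
WORKDIR /app
COPY Cargo.toml Cargo.lock* ./
COPY src ./src
RUN cargo build --release --target x86_64-unknown-linux-musl

# --- runtime: distroless static, runs as its built-in nonroot user ---
FROM gcr.io/distroless/static-debian12:nonroot
# Replace 'myapp' with your binary name
COPY --from=builder /app/target/x86_64-unknown-linux-musl/release/myapp /myapp
EXPOSE 8080
ENTRYPOINT ["/myapp"]
```

The runtime image has no shell and no package manager, so debug inside the builder stage rather than the final image.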

6) Go (multi-stage, distroless, no host Go)

Structure

```
/app
├─ go.mod
└─ main.go
```

Dockerfile.go

```dockerfile
# --- builder ---
FROM golang:1.22-bookworm AS builder
WORKDIR /app
COPY go.mod go.sum* ./
RUN go mod download
COPY . .
# static for tiny runtime
ENV CGO_ENABLED=0 GOOS=linux
RUN go build -trimpath -ldflags="-s -w" -o /bin/app

# --- runtime (distroless, non-root) ---
FROM gcr.io/distroless/static-debian12:nonroot
USER nonroot:nonroot
COPY --from=builder /bin/app /app
EXPOSE 8080
ENTRYPOINT ["/app"]
```

Run

```bash
docker build -f Dockerfile.go -t go-app .
docker run --rm -p 8080:8080 go-app
```

Notes that save you pain

- Non-root everywhere. Each Dockerfile sets `USER` to limit blast radius.

- Hot reload in containers works when the watcher runs inside the container and you bind-mount your source (`-v "$PWD":/app`).

- macOS performance: heavy I/O on bind mounts can be slow. Prefer named volumes for package caches (e.g., mount `~/.npm` or `~/.cache/pip` as a Docker volume), or move to remote dev (Codespaces/K8s workspace) for big repos.

- Dev ≈ CI ≈ Prod: build the same image in CI that you run locally. That’s how you delete “works on my machine” from your vocabulary.

Conclusion

In the AI era, environment determinism is table stakes. Keep your host clean. Put each project’s reality in code (Dockerfile/Dev Container). Your onboarding shrinks, your incidents shrink, and your laptop stops pretending to be every runtime under the sun.

A natural next step is a devcontainer.json for each stack, so VS Code/Cursor opens these projects exactly the same way on any machine (or in Codespaces/K8s).